Automating Rules with LogicMaps

The international Rules as Code movement has been around for 6-7 years now, but there is no consensus on exactly what it means, or how to go about achieving it. The nebulous concept is that laws ought to be automated — somehow. The general idea is easy to sign on to, but much harder to turn into practical applications. And without practical applications, there are no reusable templates for other projects to follow.

Rules as Code

We already have rules, so the nominal upshot of the concept is to connect them to the code part. Most early projects agree that having the code part in mind when you are fashioning the rules makes for better rules. So there is benefit to the process, even if code doesn't automatically come out of the other end. We, of course, agree. So much so that what comes out the other end in our system is an integral part of making the rules better to begin with. Our approach could be said to succeed even if the LogicMaps were put in the closet after the natural language version of the rules is published. But surely you have noticed by now that what comes out the other end of our solution looks a lot like the decision trees that constitute the logical backbone of computer code.

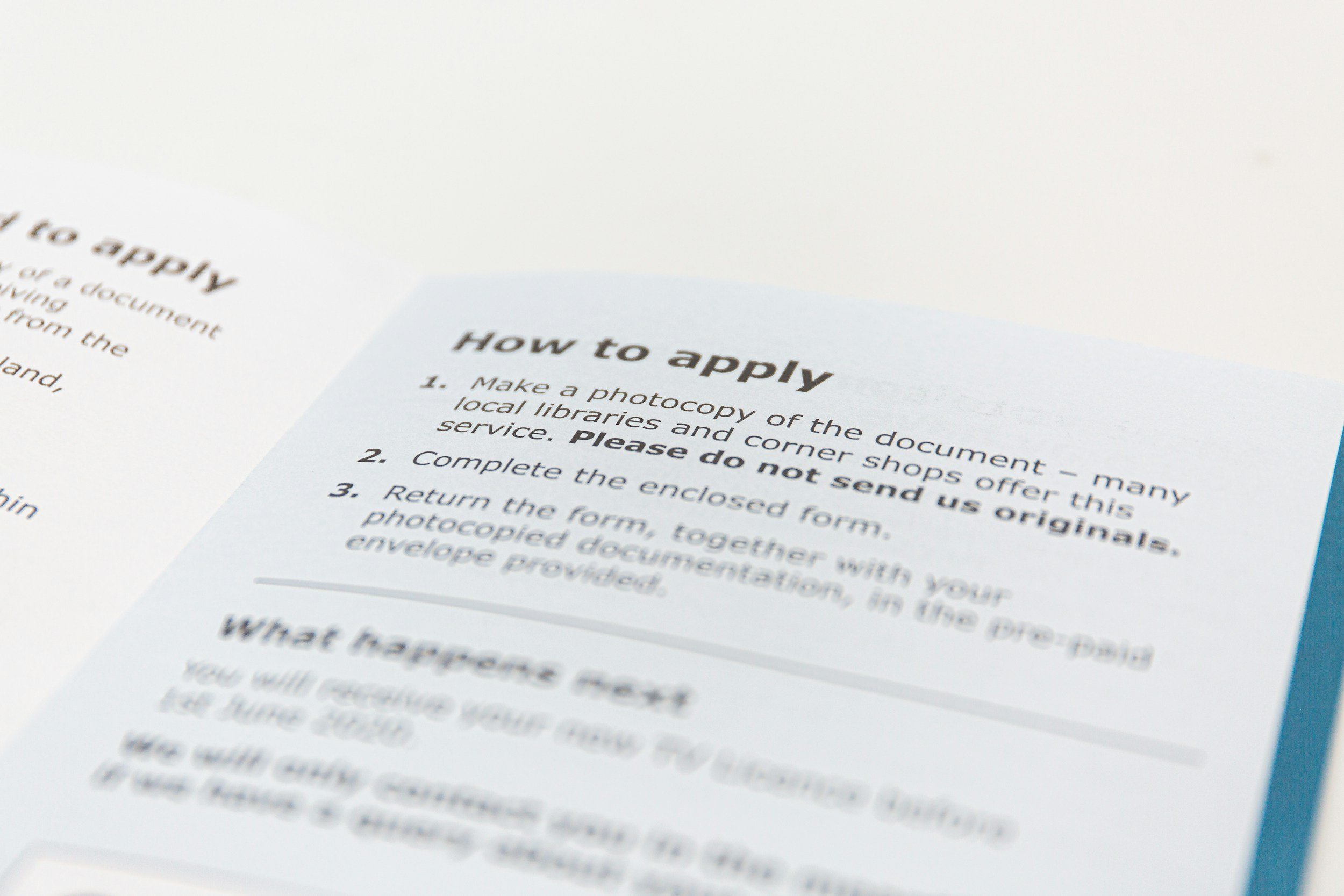

The two paradigm cases of automation that are often cited by the Rules as Code movement are 1) public-facing, interactive benefit eligibility web applications, and 2) automated compliance checking for regulated businesses. In both cases, there is an existing fact scenario (the applicant's recent life history, or the business's recent transactions in a database) that must be matched to an application of the rules. The proposed "code" part spans a spectrum. At one end are dedicated new languages and "rule engines" that directly execute some form of the rules to do this matching. At the other end are "specifications" for automated applications that can be further implemented in the form, language, and system of the implementers' choosing.

One end of the spectrum requires a certain amount of opaque trust by the stakeholders in the novel rules engine. And it requires participants all down the line, from lawmakers to implementers, to acquire a new set of skills. People naturally resist this. There is a cost to acquiring new skills, and becoming dependent on new standards, and this cost will typically be resisted until there is a clear winner in the marketplace to commit to, and some evidence that it will be around for the long haul. But at least you have automation.

The other end of the spectrum is more ecumenical. Specifications are more abstract than code, allowing many different ways to carry out the implementation. This lessens the need for new skills, and indefinitely postpones the need to pick winners. But most of the automation step has yet to be accomplished. The opaque trust issue is lessened, but it still must be faced in the form of verifying the eventual applications. Who audits these? How do we know that the code matches the specifications? If an applicant is denied a benefit by the software, to whom can they appeal?

Rules as LogicMaps

Our approach is to generalize Rules as Code to Rules as Consequences. What comes out the back end of the process is more general than code, akin to the specifications end of the spectrum. The LogicMaps leave a lot of room for custom implementation in your language of choice, but not by being more abstract. They are very concrete. And because of that, they specify exactly what must be implemented in code (concerning the application of the rules). No one needs to learn new skills. There will be clear isomorphisms between the LogicMaps and the implementing graphs in the computer code. Programmers know how to do this.
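To make the isomorphism concrete, here is a minimal sketch in Python. The rule itself (resident AND (income below limit OR has dependents)), the fact names, and the `Question`/`Outcome` types are all hypothetical, invented for illustration; they are not taken from any actual LogicMap. The sketch holds the map once as data and once as hand-written if/else, so the two can be compared branch by branch.

```python
from typing import NamedTuple, Union


class Outcome(NamedTuple):
    label: str


class Question(NamedTuple):
    fact: str                           # name of the yes/no fact being tested
    if_yes: "Union[Question, Outcome]"
    if_no: "Union[Question, Outcome]"


# Hypothetical map: resident AND (income below limit OR has dependents).
ELIGIBILITY_MAP = Question(
    "is_resident",
    if_yes=Question(
        "income_below_limit",
        if_yes=Outcome("eligible"),
        if_no=Question("has_dependents", Outcome("eligible"), Outcome("ineligible")),
    ),
    if_no=Outcome("ineligible"),
)


def decide_from_map(node, facts):
    """Walk the map, following the branch selected by each fact."""
    while isinstance(node, Question):
        node = node.if_yes if facts[node.fact] else node.if_no
    return node.label


def decide_hand_coded(facts):
    """The same map as plain if/else: one test per question, one return per leaf."""
    if facts["is_resident"]:
        if facts["income_below_limit"]:
            return "eligible"
        if facts["has_dependents"]:
            return "eligible"
        return "ineligible"
    return "ineligible"
```

Each `Question` in the data corresponds to one `if` in the hand-coded version, and each `Outcome` to one `return`, which is the kind of one-to-one correspondence a reviewer can check by eye.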

More to the point, everyone can read these specifications, from the lawmakers right down to the end consumer. So the trust issue is significantly eased. A denied applicant can see exactly why he or she was disqualified. The applicant may still feel that the rules as written are unfair, but they will know exactly which of their personal facts were the cause, and can perhaps see what one or two facts would have made the difference.
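As a rough illustration of how such an explanation might be produced, the sketch below records the path an applicant takes through a map and then lists the single facts that, if different, would have changed the result. The map, the fact names, and the functions `trace` and `decisive_facts` are hypothetical, chosen only for this example.

```python
# Hypothetical map as nested (fact, if_yes, if_no) tuples; strings are outcomes.
ELIGIBILITY_MAP = (
    "is_resident",
    ("income_below_limit", "eligible",
     ("has_dependents", "eligible", "ineligible")),
    "ineligible",
)


def trace(node, facts):
    """Return the outcome plus the (fact, answer) pairs that led to it."""
    path = []
    while not isinstance(node, str):
        fact, if_yes, if_no = node
        answer = facts[fact]
        path.append((fact, answer))
        node = if_yes if answer else if_no
    return node, path


def decisive_facts(node, facts):
    """Facts whose single reversal would change the outcome."""
    outcome, _ = trace(node, facts)
    return [
        name for name in facts
        if trace(node, {**facts, name: not facts[name]})[0] != outcome
    ]


applicant = {"is_resident": True, "income_below_limit": False, "has_dependents": False}
outcome, path = trace(ELIGIBILITY_MAP, applicant)
```

For this applicant the outcome is `"ineligible"`, the path lists all three questions with their answers, and `decisive_facts(ELIGIBILITY_MAP, applicant)` returns `["income_below_limit", "has_dependents"]`: either fact alone would have made the difference, while residency was not the problem.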

And no one needs to learn new skills, and that's important if we are to make progress. It is unrealistic to ask lawmakers and lawyers to learn a new language for writing rules. It just won't happen. The laws will continue to come out in natural language, as before. The "new" language, in our system, is an old one, already known by logicians. The translation from natural language to this formal language is done behind the scenes by the LLM. That's how we get to LogicMaps automatically. The formal logic language is just an invisible way station on the road from rules to consequences. Neither the initial rule-makers nor the ultimate coders need to learn it, or even be aware of it.

LogicMaps as Code

There remains the small issue of verifying that the implementing software for benefit applications and compliance checking faithfully matches the LogicMaps. The issue is small because the conceptual gap between LogicMaps and the if-then-else statements in code is small. The diagrams already mimic the kind of low-level design documents that programmers code directly from. Still, programmers make mistakes. Software has bugs. But verifying the correctness of the LogicMap implementations is considerably easier than for software generally. That's because two verification methods come into play that are not practical for most software.

One is called correct by construction. In applications that involve consumer safety, or national security, or have a very high cost of failure such as computer chip fabrication, the sponsoring agency has the resources to have the code derived directly from formal specifications, eliminating the possibility of human errors. The deriving software itself must be verified, of course, but this only needs to happen once. Then every derivation inherits the provenance of this verification.

Although the human-facing output of our process is a set of annotated drawings (the LogicMaps), the logical output (behind the scenes) is a formal, logical term representing a graph. This graph can be used to automatically generate the implementing software (web portal or database program) in much the same way that operational websites are automatically generated by drag and drop design tools. Much of the code for these websites is pre-written and tested in the form of templates. The template is then specialized with custom images and text, but the layout and backbone navigation has already been implemented. Similar templates can be pre-written (and tested/verified) for the typical legal applications, parameterized around a general decision graph. Adding the actual graph for a set of consequences then converts it into a finished, customized application.
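The template idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the graph format (a dict from node id to prompt and two successors), the node ids, and the function `run_interview` are hypothetical, not the format of the actual logical term. The point is that the interview loop is written and tested once, and the decision graph is the only part that changes per application.

```python
def run_interview(graph, start, ask):
    """Generic template: walk any decision graph, asking only the questions
    that lie on the path actually taken. `ask` takes a prompt and returns a bool."""
    node = start
    while node in graph:                 # ids not in the graph are outcome labels
        prompt, yes_next, no_next = graph[node]
        node = yes_next if ask(prompt) else no_next
    return node


def console_ask(prompt):
    """One possible front end: a yes/no question on the command line."""
    return input(prompt + " [y/n] ").strip().lower().startswith("y")


# Hypothetical graph plugged into the template.
BENEFIT_GRAPH = {
    "q_resident": ("Are you a resident?", "q_income", "ineligible"),
    "q_income": ("Is your income below the limit?", "eligible", "q_dependents"),
    "q_dependents": ("Do you have dependents?", "eligible", "ineligible"),
}
```

Calling `run_interview(BENEFIT_GRAPH, "q_resident", console_ask)` would run the questionnaire interactively; swapping in a different graph, or a different `ask` function backed by a web form or a database lookup, leaves the template itself untouched.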

The other verification method is good old-fashioned testing. Run representative test cases and check that the results are correct. The problem with software testing is that most programs have infinite consequences, so the testing is only as good as the coverage of the test cases. Legal rules, as we've pointed out before, have finite consequences, and manageably few of them. So the LogicMaps cover all of the consequences. They are a specification of a universal set of test cases for any software that purports to implement them.
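A minimal sketch of what exhaustive testing could look like, assuming a hypothetical map over three yes/no facts. The map, the fact names, and both candidate implementations are invented for illustration. With three facts there are only eight possible cases, so every one of them can be checked against the map.

```python
from itertools import product

FACTS = ("is_resident", "income_below_limit", "has_dependents")

# Hypothetical map as nested (fact, if_yes, if_no) tuples; booleans are outcomes.
SPEC_MAP = (
    "is_resident",
    ("income_below_limit", True, ("has_dependents", True, False)),
    False,
)


def spec_outcome(facts, node=SPEC_MAP):
    """What the map says the decision must be for these facts."""
    while not isinstance(node, bool):
        fact, if_yes, if_no = node
        node = if_yes if facts[fact] else if_no
    return node


def all_cases():
    """Every combination of answers: 2 ** len(FACTS) cases in total."""
    for values in product((False, True), repeat=len(FACTS)):
        yield dict(zip(FACTS, values))


def mismatches(implementation):
    """Cases where the implementation disagrees with the map."""
    return [case for case in all_cases() if implementation(case) != spec_outcome(case)]


def good_impl(facts):
    return facts["is_resident"] and (facts["income_below_limit"] or facts["has_dependents"])


def buggy_impl(facts):
    # Bug: forgets the "has dependents" route to eligibility.
    return facts["is_resident"] and facts["income_below_limit"]
```

Here `mismatches(good_impl)` returns an empty list, while `mismatches(buggy_impl)` returns the single case of a resident with dependents whose income is above the limit. The number of cases doubles with each added fact, so for larger maps one might generate one test per path through the map rather than one per combination of answers.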

LogicMaps Instead of Code

The point of automating the law is to make the application of rules more equitable and more efficient. Sometimes these two goals conflict. The most efficient application of the rules would take humans out of the loop entirely — both the administrator and the consumer. A black box that says "you're in" or "you're out." Case closed. There is a burgeoning fear that this sort of thing is going to happen with AI, if it's not happening already. Both the consumer, and the erstwhile administrator, would like to know why decisions are being made by the software.

This human interest in why is behind recent legislation in the EU to require black box systems that make autonomous decisions about consumers to incorporate a degree of transparency. Such software decisions must be traceable, providing a human-understandable explanation of the decision. Long before we had automation, though, we faced a similar inscrutability with the laws and regulations themselves. A consumer under pressure to hit 'I agree' to a 20-page document of legalese in order to download software or subscribe to a service has no chance of understanding the why of it. But by turning rules into their consequences, we attack both problems with a single solution. Explaining what the law means and explaining how its automated application is arrived at become the same thing.

Any code that is produced downstream of the LogicMaps that takes the administrator and the consumer out of the loop purports to be automating the LogicMap itself. The why is in the maps. If the software reaches a different decision, it is wrong! So the possible decisions are explainable before they even become automated. This allows automation to proceed without sacrificing equitability. It also promotes equitability even if no subsequent code is produced. By publishing the LogicMaps alongside the rules, consumers, lawyers and adjudicators can assess the application of the rules the old-fashioned way: by reading and thinking.

So although Rules as LogicMaps paves the way for Rules as Code, the maps are themselves an end product.

Retroactive Rules as LogicMaps

There are an awful lot of laws and regulations already out there that are too late to be improved by this technology during the drafting phase. And let's be honest, the vast majority of laws yet to be written will not use this technology either, even if it is wildly successful. But there is nothing stopping this technology from being applied retroactively to legacy rules. The text of laws and regulations is now largely in the public domain. Anyone can access it. So pairing these texts with their LogicMaps can be pursued as a public service, by anyone so inclined.

Trusting Legal Software

Software to automate legal rules is fairly new. There are new rule languages and software tools that attempt to directly emulate "legal reasoning" including counterfactuals, rules overriding other rules, and default assumptions. The aim here is to provide formal languages that look very much like the existing rules written in legalese, hoping that the apparent isomorphisms will ease the transition from informal to formal documents, and thus foster trust concerning these proposed solutions.

That is importantly not what we are offering with LogicMaps. The software that we are offering is not legal software. It is logic software. There is nothing specifically "legal" about it. It can be applied to any system of rules, about any subject matter, with finite consequences. The form in which we represent the rules to derive the consequences is a well-known system of logic that has been around for about 170 years. The decision procedure for that logic, which we encode in LogicMaps, has been around for about 65 years. So the formalism and its accompanying software "calculator" already have a long vetting history. We know what this software does and how it does it. It has its own, independent proofs of correctness. What's new, in our offering, is the combination of three existing technologies that have, until now, largely been used for different purposes: one to prove the consequences of formal rules, one to optimize expressions in Boolean algebra (to produce better electronic switching circuits), and one to translate between different languages. So there isn't much about this software that you have to trust. It treads well-known paths of well-known logic.

Because of that, it has a very specialized use: deducing the consequences of logical rules. It deals exclusively with the consequences of stringing sentences together with 'if', 'and', 'or' and 'not'. When you string these sentences together with quasi-logical phrases such as 'notwithstanding' and 'unless the contrary is shown' and 'if the postulated events had been true', it is the LLM that coerces them into the primitive Boolean operators.

There has been quite a bit of commentary recently concerning fears about Rules as Code usurping the authority of lawmakers, by putting the application of laws into the hands of technologists with inscrutable software. How are we to trust this software if the traditional authorities cannot verify it? Our solution side-steps this entire issue. Rules are translated directly into human readable decision procedures — the LogicMaps. Trust is anchored there.